Introduction

React and Next.js have powered some of the web’s most ambitious projects in the last few years. In this period, teams have pushed the envelope on performance (achieving dramatic gains in Core Web Vitals like LCP and the new INP metric), balanced server-side and client-side rendering trade-offs, devised clever caching and state management schemes, and improved both developer and user experience.

This write-up dives into real-world case studies from engineering teams and products, highlighting their challenges, solutions, and results. We first explore individual case studies in detail — each illustrating advanced techniques in production React/Next.js apps — then distill overarching takeaways and best practices shaping the industry.

Featured Case Studies

- Vio: Profiling and optimizing INP for a React application

- DoorDash: Migrating from CSR to Next.js SSR for speed and SEO

- Preply: Boosting INP responsiveness without React Server Components

- GeekyAnts: Upgrading to Next.js 13 and RSC for a “blazing-fast” website

- Inngest: Adopting Next.js App Router for improved developer experience and instant UX

For more lessons on building large-scale web apps, you may be interested in Building Large Scale Web Apps | A React Field Guide or Success at Scale.

Vio — Profiling and Optimizing INP for a React App

Vio is a global accommodation booking platform that connects travelers with hotels, vacation rentals, and other lodging options worldwide.

Challenge

When analyzing Vio’s Core Web Vitals, the team noticed poor Interaction to Next Paint (INP) scores, indicating that user interactions were taking too long to process. INP was around 380ms, well above Google’s ‘good’ threshold of 200ms. The delay was particularly noticeable during a crucial initial-click interaction. With INP becoming an official Google ranking factor in March 2024, improving this metric was essential for both user experience and SEO.

Solution

The team identified and addressed the root causes of poor INP performance through careful profiling and analysis. Their solutions included:

- Performance profiling and analysis: Using the Chrome DevTools Performance panel and Lighthouse, the team mapped out exactly where time was spent during interactions. By adding custom User Timing marks and analyzing long React commits, they pinpointed the key bottlenecks. This revealed that browser reflow was a significant issue: layout recalculations were forcing the browser to re-render too much of the page.

- Code-Splitting and lazy loading: The initial bundle included code for features that weren’t immediately needed. By implementing dynamic imports with `React.lazy()` and `Suspense`, they reduced the initial JavaScript payload, so the browser had less code to parse and execute before becoming interactive.

- Debouncing and event optimization: Analysis showed that certain event handlers were firing too frequently and blocking the main thread. Debouncing the scroll and resize handlers prevented excessive layout thrashing, and click handlers were optimized with event delegation instead of attaching a listener to each element.

- State management refinements: The app was re-rendering more components than necessary on state changes. Applying `React.memo()` strategically and using the `useMemo` hook for expensive computations eliminated unnecessary re-renders. Component state was also moved closer to where it was needed, reducing the scope of updates.
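The debouncing step can be illustrated with a small, framework-agnostic TypeScript sketch. It is not Vio’s code: the `debounce` name, the injectable `Timers` interface (added only so the behavior can be tested without a real clock), and the hypothetical `recalculateLayout` handler in the usage comment are all assumptions.

```typescript
// Minimal timer abstraction so a fake clock can be injected in tests.
interface Timers {
  set(callback: () => void, ms: number): unknown;
  clear(handle: unknown): void;
}

const realTimers: Timers = {
  set: (callback, ms) => setTimeout(callback, ms),
  clear: (handle) => clearTimeout(handle as ReturnType<typeof setTimeout>),
};

// Returns a wrapper that runs `fn` only after `wait` ms pass without another
// call; every new call cancels the pending run and restarts the countdown.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  wait: number,
  timers: Timers = realTimers,
): (...args: A) => void {
  let handle: unknown = undefined;
  return (...args: A) => {
    if (handle !== undefined) timers.clear(handle);
    handle = timers.set(() => {
      handle = undefined;
      fn(...args); // uses the arguments from the most recent call
    }, wait);
  };
}

// Browser usage (recalculateLayout is hypothetical; 150 ms is an example delay):
// window.addEventListener("resize", debounce(() => recalculateLayout(), 150));
```

Each call cancels the previously scheduled one, so a burst of resize or scroll events produces a single handler run after the burst ends instead of one layout-heavy run per event.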

Results

These optimizations led to dramatic improvements:

- INP improved from 380ms to 175ms, well under Google’s “good” threshold of 200ms.

- Initial JavaScript payload was reduced by 60%.

- Unnecessary re-renders were largely eliminated, with the React Profiler showing far fewer wasted renders.

- User feedback indicated the interface felt noticeably more responsive.

The case demonstrated that methodical performance analysis and targeted optimizations could significantly improve INP without requiring a complete architectural overhaul.

Further Details

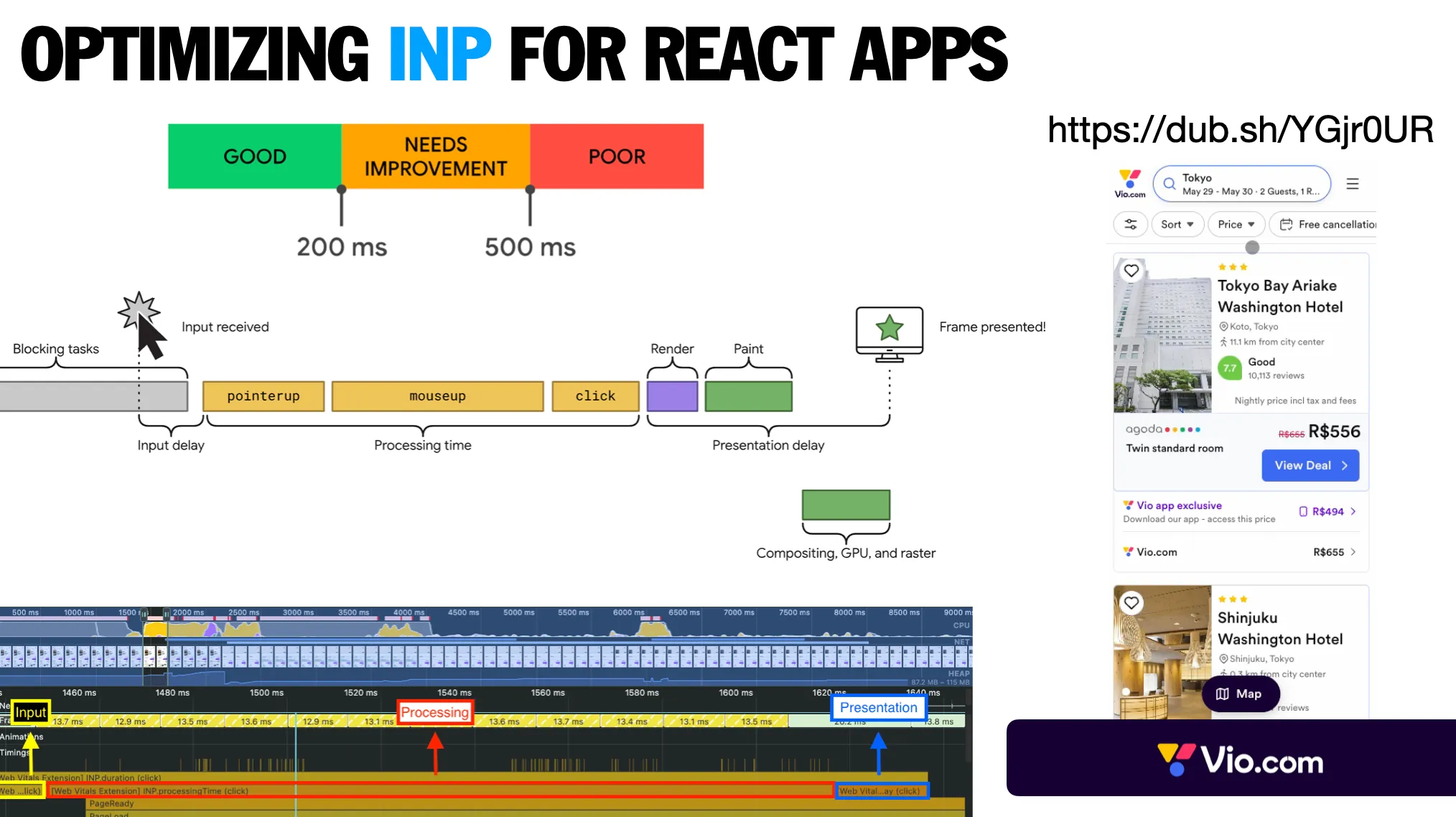

INP (Interaction to Next Paint) is the newest interaction metric available on the web. An interaction can be a click, a keyup, or a pointerup. Each interaction is broken into three phases (input delay, processing duration, and presentation delay), which helps target optimization efforts. Vio is a large React app that has been optimized for INP, and the team was able to make the app 69% faster.
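The three phases map directly onto fields of a `PerformanceEventTiming` entry from the Event Timing API (`startTime`, `processingStart`, `processingEnd`, and `duration`). The sketch below uses a plain object with those fields so it runs outside a browser; `breakDownInteraction` and the sample timings are illustrative assumptions, not Vio’s tooling.

```typescript
// The subset of PerformanceEventTiming fields needed for the breakdown (ms).
interface EventTimingLike {
  startTime: number; // when the user interacted
  processingStart: number; // when event handlers began running
  processingEnd: number; // when event handlers finished
  duration: number; // startTime until the next paint (browsers round this to 8 ms)
}

interface InpPhases {
  inputDelay: number;
  processingDuration: number;
  presentationDelay: number;
}

function breakDownInteraction(e: EventTimingLike): InpPhases {
  return {
    // Main thread was busy with other work before handlers could start.
    inputDelay: e.processingStart - e.startTime,
    // Time spent inside the event handlers themselves.
    processingDuration: e.processingEnd - e.processingStart,
    // Rendering, layout, and paint after the handlers finished.
    presentationDelay: e.startTime + e.duration - e.processingEnd,
  };
}

// In a browser, real entries can be observed like this:
// new PerformanceObserver((list) => {
//   for (const entry of list.getEntries()) {
//     console.log(breakDownInteraction(entry as PerformanceEventTiming));
//   }
// }).observe({ type: "event", durationThreshold: 40, buffered: true });
```

Whichever phase dominates points to the fix: a long input delay suggests other long tasks were occupying the main thread, a long processing duration points at the event handlers themselves, and a long presentation delay points at rendering and layout work.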

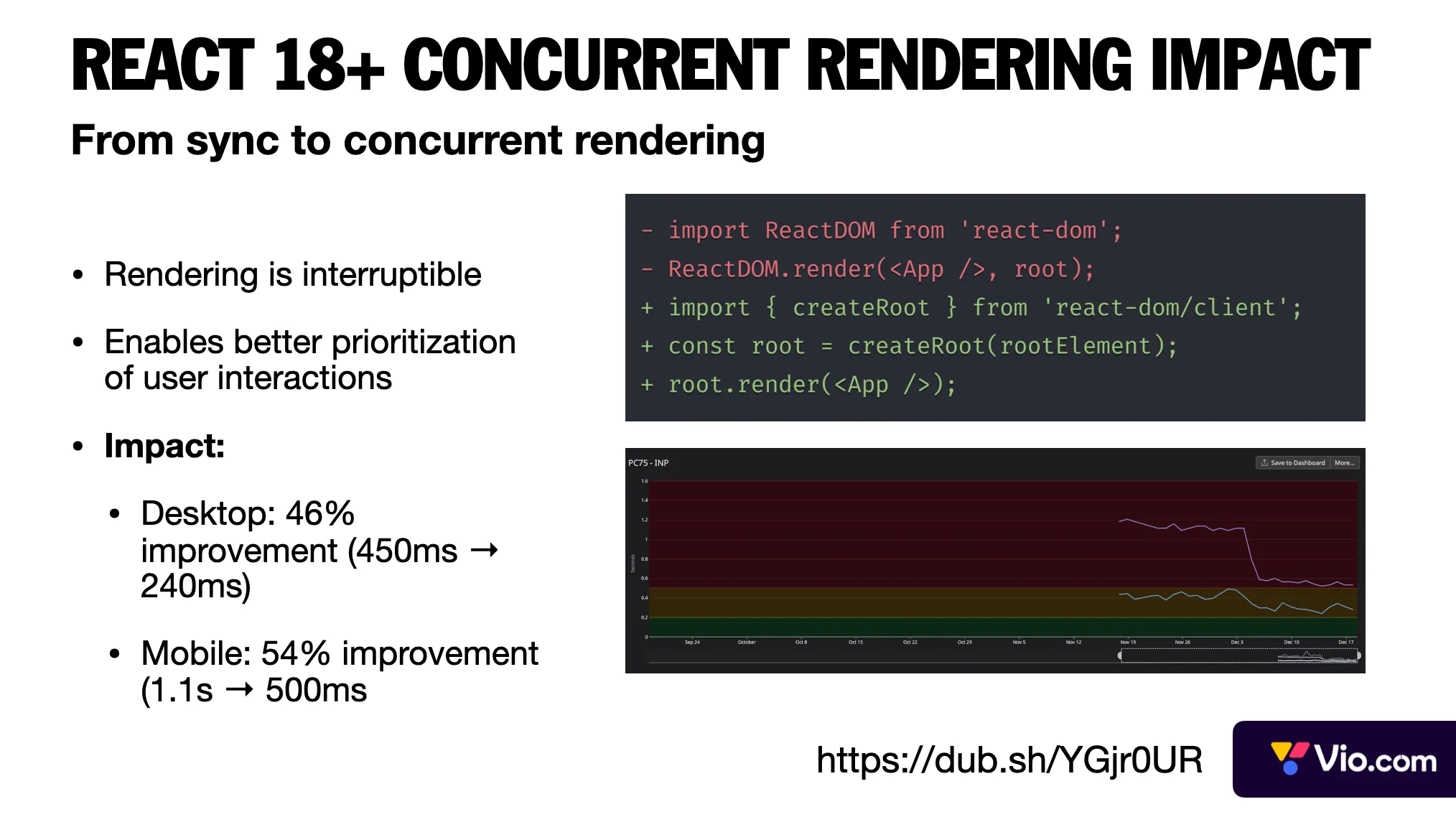

Their first big win came from upgrading to React 18. The key benefit of this update was concurrent rendering, which lets high-priority tasks such as user input interrupt rendering work. This single change improved desktop performance by 46% and mobile performance by 54%. The crucial insight is that framework-level changes can sometimes have more impact than local optimizations.

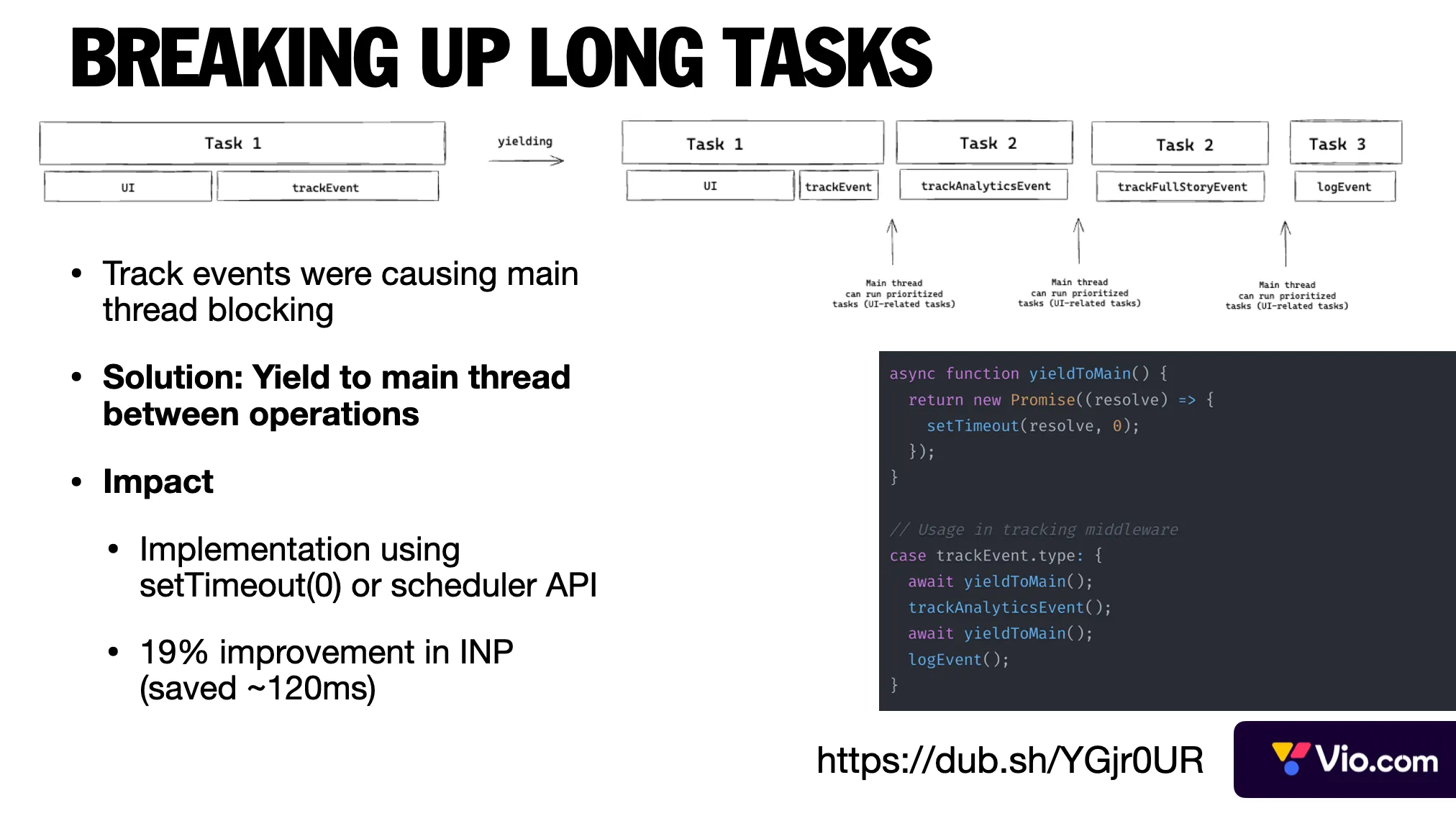

Analytics and tracking events were creating long tasks that blocked the main thread. Instead of removing or moving these to service workers, the team implemented a more straightforward solution: yielding to the main thread between operations. This broke up the app’s long tasks and improved INP by 19%. The key learning: sometimes simple solutions using basic JavaScript features can solve complex performance problems.
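Yielding between operations can be sketched with a helper that prefers `scheduler.yield()` where the browser supports it (recent Chromium-based browsers) and otherwise falls back to a `setTimeout(0)` macrotask, a commonly recommended pattern. This is an illustration rather than Vio’s implementation; `yieldToMain`, `runTasks`, and the injectable `yieldFn` parameter are assumed names, and the functions in the usage comment are hypothetical.

```typescript
type YieldFn = () => Promise<void>;

// Prefer scheduler.yield() when available; otherwise end the current task
// with a setTimeout macrotask so queued input can be handled first.
const yieldToMain: YieldFn = () => {
  const scheduler = (globalThis as unknown as {
    scheduler?: { yield?: () => Promise<void> };
  }).scheduler;
  if (scheduler !== undefined && typeof scheduler.yield === "function") {
    return scheduler.yield();
  }
  return new Promise<void>((resolve) => setTimeout(() => resolve(), 0));
};

// Runs each task in order, yielding to the main thread between tasks
// instead of executing them all as one long task.
async function runTasks(
  tasks: ReadonlyArray<() => void>,
  yieldFn: YieldFn = yieldToMain,
): Promise<void> {
  let first = true;
  for (const task of tasks) {
    if (!first) await yieldFn();
    first = false;
    task();
  }
}

// Usage sketch (updateUI, trackClick, and sendToAnalytics are hypothetical):
// async function onClick() {
//   updateUI();          // urgent visual update first
//   await yieldToMain(); // let the browser paint before non-urgent work
//   await runTasks([trackClick, sendToAnalytics]);
// }
```

Each `await` ends the current task, so input events that arrived in the meantime can be processed before the next piece of analytics work runs.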

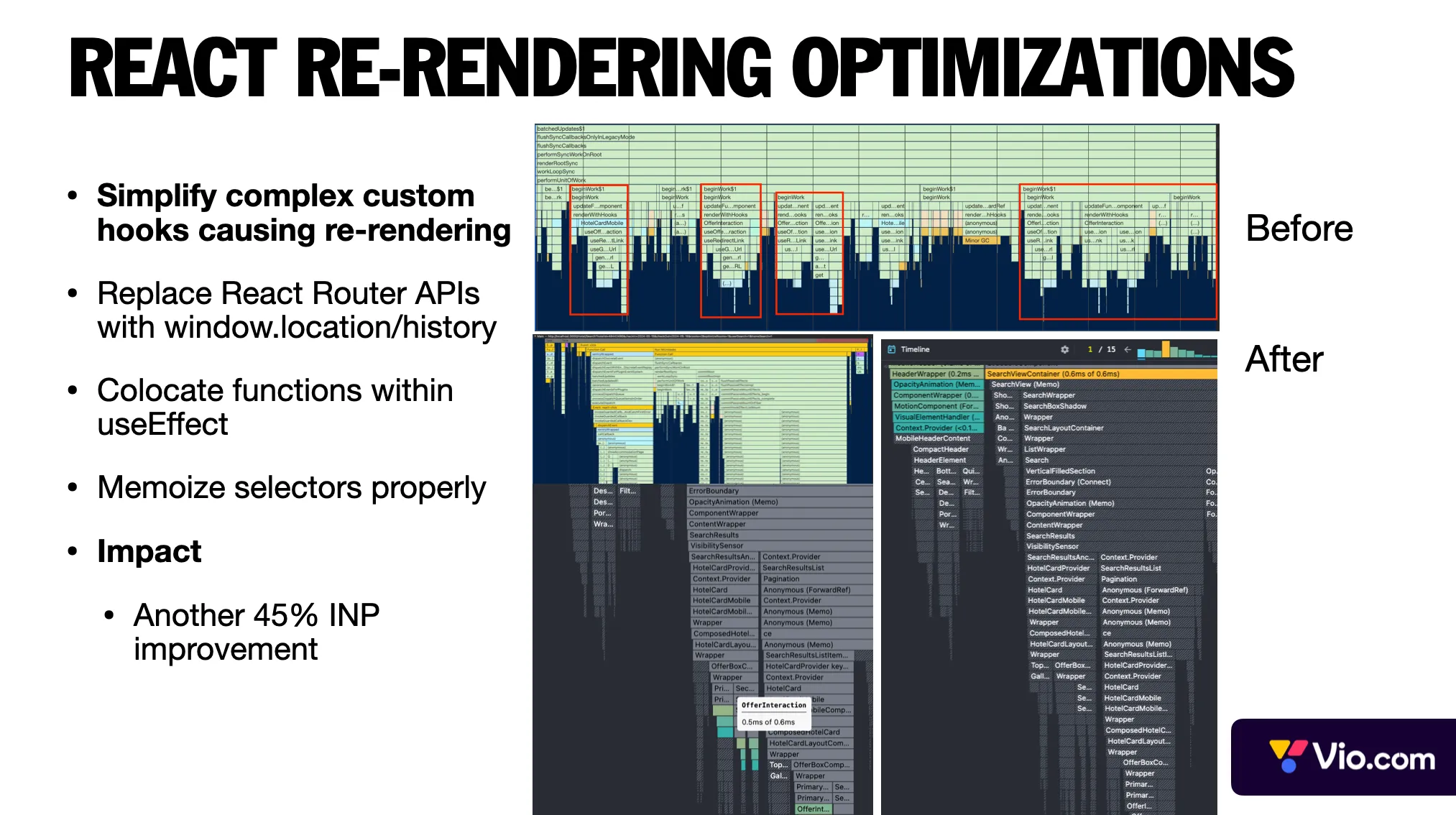

The final piece was fixing React rendering issues, which the team tackled in several ways:

- Breaking down complex custom hooks into smaller ones. In the flame graph above, the team noticed a complex hook being called many times and re-rendering the components unnecessarily.

- Replacing React Router APIs with window.location where possible.

- Improving Redux selector memoization.

Combined, these changes gave the team another 45% improvement in INP.

The takeaway: profiling is essential, because performance bottlenecks are often in unexpected places!
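Selector memoization matters because React-Redux’s `useSelector` re-renders a component whenever the selected value changes by reference, so a selector that calls `filter()` on every run returns a new array and triggers a re-render even when nothing relevant changed. Libraries such as Reselect avoid this by recomputing only when inputs change. The dependency-free sketch below shows the idea; `createMemoSelector`, the `Hotel`/`State` shapes, and `selectAvailableHotels` are hypothetical and not taken from Vio’s codebase.

```typescript
// Memoizes a derived value on the identity (Object.is) of its input.
function createMemoSelector<S, I, R>(
  selectInput: (state: S) => I,
  compute: (input: I) => R,
): (state: S) => R {
  let cache: { input: I; result: R } | undefined;
  return (state: S) => {
    const input = selectInput(state);
    // Recompute only when the input reference changed.
    if (cache === undefined || !Object.is(cache.input, input)) {
      cache = { input, result: compute(input) };
    }
    return cache.result;
  };
}

// Hypothetical state shape for illustration.
interface Hotel {
  id: string;
  price: number;
  available: boolean;
}

interface State {
  hotels: Hotel[];
  searchText: string;
}

// Returns the same array reference until state.hotels itself changes,
// so a component reading it via useSelector skips unrelated re-renders.
const selectAvailableHotels = createMemoSelector(
  (state: State) => state.hotels,
  (hotels) => hotels.filter((hotel) => hotel.available),
);
```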

DoorDash – Migrating from CSR to Next.js SSR for Speed and SEO

DoorDash is a food delivery platform connecting customers with restaurants and retailers for on-demand delivery of meals and goods.

Challenge

DoorDash’s web frontend was originally a client-side rendered (CSR) single-page React app. It suffered from slow load times, bloated JS bundles, and poor SEO. As the app grew, its bundle size became “difficult to optimize,” and initial loads showed a blank screen until the JavaScript had downloaded and rendered the page, degrading the user experience. With many URLs rated “Poor” for Core Web Vitals in Google Search Console, DoorDash needed to improve page speed for critical pages (the homepage and store pages) to enhance UX and search rankings.

Solution

The team migrated pages to Next.js for server-side rendering (SSR) incrementally. Rather than attempting a complete rewrite, they rolled out page by page, running the old CSR app and the new Next.js SSR pages side by side. This let them modernize gradually while old and new features coexisted. Key tactics included:

- SSR with lazy hydration: Using Next.js, pages are rendered to HTML on the server for a fast, contentful first paint. To avoid heavy server computation delaying Time to First Byte, the team refactored and lazily loaded non-critical components during SSR. By deferring some components, they reduced server-side work and delivered initial HTML faster (mitigating SSR’s CPU overhead).

- Bridging SSR and CSR State: A custom context (`AppContext`) was created so that shared components could work transparently in both environments. It provided common data (such as cookies and the URL) and an `isSSR` flag, which components could read to adjust their behavior (e.g., disabling client-only features during SSR). This let the same components run under SSR or CSR, easing the migration. For example, analytics events became no-ops on the server to prevent errors, and links rendered as Next.js `<Link>` components under SSR and as React Router links under CSR.

- Routing and code organization: Next.js’ file-based routing replaced React Router for new pages, and the team used a “trunk-branch-leaf” strategy, focusing optimization on top-level pages first. They also established a clear rollout strategy with a fallback: by migrating one page at a time with monitoring in place, they could catch issues early and revert a page if needed.
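The `isSSR` pattern can be sketched without React: a context value carries the environment flag, and a factory hands shared components an analytics object that silently does nothing on the server. Only `AppContext` and `isSSR` come from DoorDash’s description; the `url` and `cookies` field names, `createAnalytics`, and the `send` transport are hypothetical. In the real app, such a value would be provided through React context.

```typescript
// Shape of the shared context value (field names other than isSSR are assumptions).
interface AppContextValue {
  isSSR: boolean;
  url: string;
  cookies: Record<string, string>;
}

type AnalyticsPayload = {
  event: string;
  url: string;
  props: Record<string, unknown>;
};

interface Analytics {
  track(event: string, props?: Record<string, unknown>): void;
}

// Returns a no-op tracker during SSR and a real one in the browser, so shared
// components can call track() without checking the environment themselves.
function createAnalytics(
  ctx: AppContextValue,
  send: (payload: AnalyticsPayload) => void,
): Analytics {
  if (ctx.isSSR) {
    return { track: () => {} };
  }
  return {
    track: (event, props = {}) => send({ event, url: ctx.url, props }),
  };
}
```

Centralizing the check in one factory keeps individual components free of environment branches.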

Results

The migration yielded dramatic performance improvements. DoorDash saw 12% to 15% faster page load times on key pages, and Largest Contentful Paint (LCP) improved by 65% on the homepage and 67% on store pages. The share of URLs with “Poor” LCP (>4s) dropped by 95% (source). These gains meant users got content much sooner, directly enhancing UX and likely SEO (since Google rewards good Core Web Vitals). The team emphasizes that adopting Next.js can lead to “huge improvements in mobile web performance” when done right. Just as important, they achieved this without a big-bang rewrite: the incremental approach kept the site stable and developers productive. In summary, by moving from a heavy CSR app to SSR with Next.js, DoorDash significantly boosted speed and user experience while improving maintainability with a more modular architecture.

Preply – Boosting INP responsiveness without React Server Components

Preply is an online language-learning marketplace that connects learners and tutors.

Challenge

Preply faced an urgent performance issue on two of its most critical pages (those crucial for SEO and SEM conversion): their Interaction to Next Paint (INP) was poor. INP, which measures responsiveness to user input, was the worst Core Web Vital for those pages, meaning users experienced lag after clicking or typing. With Google set to make INP an official ranking factor in 2024, this posed an SEO risk. Moreover, the team’s ROI analysis estimated that improving INP could save ~$200k per year (likely via better ad conversion and lower bounce rates). However, they had less than three months to deliver fixes, and the app still used the older Next.js Pages Router (no React Server Components or App Router), so they had to eke out improvements within the existing architecture.

Solution

Preply formed a dedicated performance strike team and attacked the problem on multiple fronts, focusing on minimizing main-thread blocking during interactions. Some key strategies included:

- Profiling and eliminating bottlenecks: Engineers collected extensive runtime data and traces for the target pages. By measuring all the synchronous JavaScript that ran after a user interaction (which contributes to INP), they pinpointed expensive operations. Many optimizations were low-level—e.g. refactoring slow loops or heavy computations, removing unnecessary re-renders, and splitting large functions into smaller asynchronous chunks. Anything that could be deferred was offloaded until after the first paint following an interaction.

- Selective hydration via React 18: Because the app was already on React 18, the team leveraged concurrent React features to improve hydration and responsiveness. For example, they wrapped non-critical UI in `<Suspense>` boundaries so that hydrating those parts became non-blocking. The main thread could then respond to a click without waiting for the whole page to hydrate: React 18’s selective hydration let interactive components hydrate first while less urgent parts hydrated in the background. This drastically cut input delay.

- Optimizing Event Handling with Transitions: The team audited the event handlers attached to UI elements. For interactions that triggered heavy state updates, they used `startTransition` (React 18’s concurrency API) to mark those updates as non-urgent, allowing React to interrupt them when a higher-priority event (such as another user input) arrives, which keeps the interface responsive. They also introduced debouncing and throttling for frequently fired events to avoid jank on the main thread.

- Code-Splitting & Lazy Loading: Preply identified portions of the bundle that weren’t needed for the initial interaction. Aggressive code-splitting ensured less JavaScript was loaded up front, and some components and libraries were loaded on demand (after a user interaction or when scrolled into the viewport). This follows the maxim “load only what is necessary, when it is necessary,” a crucial principle for optimizing INP.

- Caching and CDN Optimizations: While focusing on frontend code, they didn’t neglect network optimizations. The team verified that their CDN (CloudFront) was effectively caching static assets and that their API responses for these pages were cacheable or pre-fetched. In past efforts, Preply had reduced latency from 1.5s to 0.5s by using AWS CloudFront and ElastiCache, so they made sure to apply similar caching for any new server endpoints involved in these pages. This ensured the browser wasn’t stuck waiting on slow network responses during an interaction.
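Loading on demand usually relies on dynamic `import()`, which bundlers such as webpack emit as separate chunks. Wrapping the loader in a function that caches its promise gives one place to trigger the load early (for example on hover or viewport entry) and lets a click handler await the same in-flight promise. This is a hedged sketch rather than Preply’s code; `loadOnce`, the `./HeavyCalendar` module, `renderCalendar`, `trigger`, and `container` are hypothetical.

```typescript
// Wraps a loader (typically () => import("...")) so concurrent and later
// callers share one promise. A failed load clears the cache so a later
// call invokes the loader again.
function loadOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending === undefined) {
      pending = loader().catch((error: unknown) => {
        pending = undefined;
        throw error;
      });
    }
    return pending;
  };
}

// Usage sketch (module path and DOM names are hypothetical):
// const loadCalendar = loadOnce(() => import("./HeavyCalendar"));
// trigger.addEventListener("pointerenter", () => {
//   loadCalendar().catch(() => {}); // start fetching the chunk early
// });
// trigger.addEventListener("click", async () => {
//   const { renderCalendar } = await loadCalendar(); // reuses the same promise
//   renderCalendar(container);
// });
```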

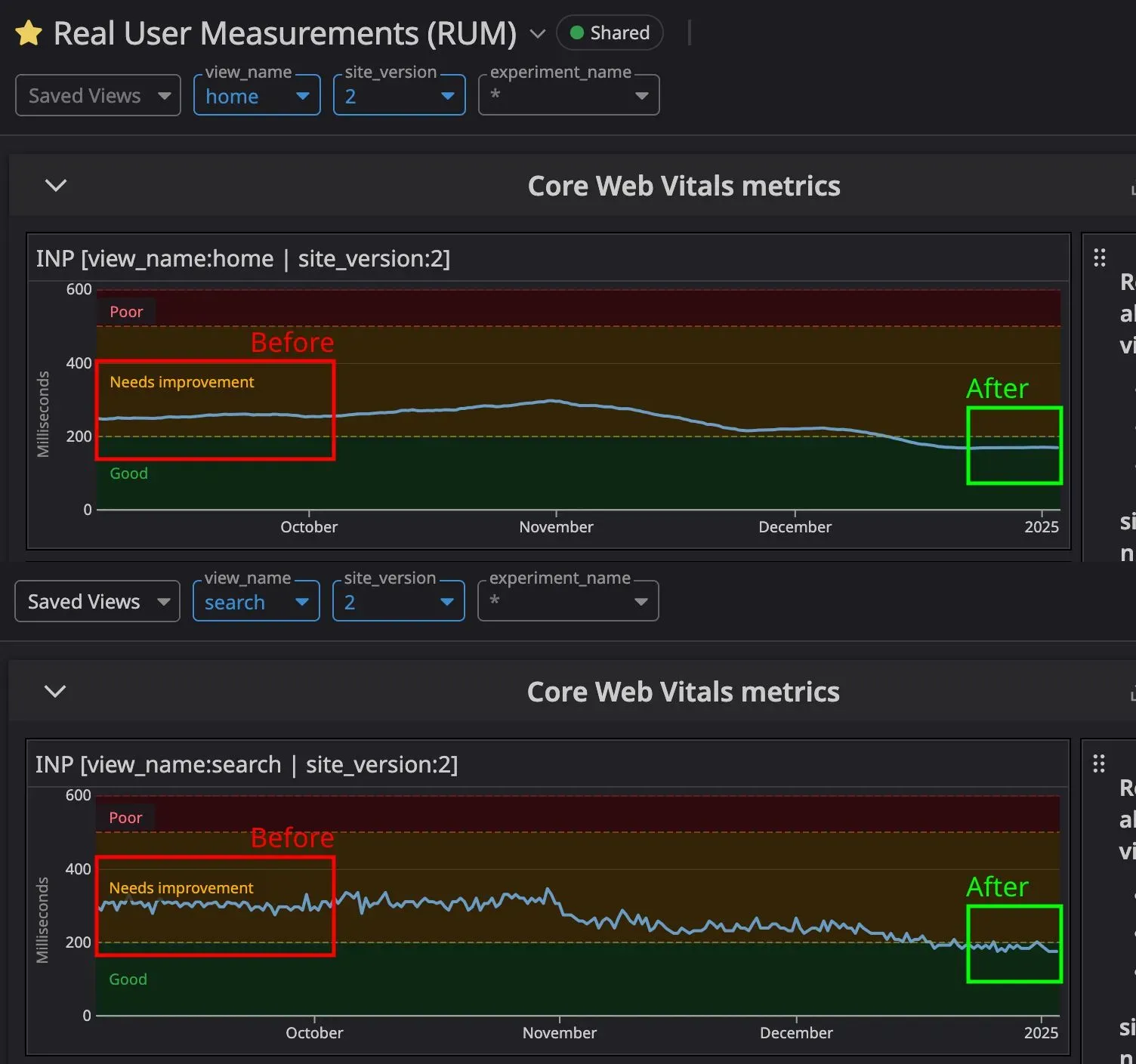

Results

In just a few months, Preply drastically improved INP on the targeted pages, bringing them into compliance with the “good” threshold of under 200 ms. Qualitatively, the pages became far more responsive: test users reported that clicks and inputs felt almost instant, where previously there was a perceptible delay. Importantly, these gains directly translated into business metrics. With a faster, more responsive interface, Preply likely saw increased conversion on those SEO landing pages (users not bouncing due to lag). The estimated $200k/year savings from better performance was a strong validation of the effort. Perhaps most impressively, Preply achieved this without adopting React Server Components or a full App Router refactor – proving that even legacy Next.js projects can be significantly optimized with focused effort. This case underscored the value of measuring real user interactions and doing whatever it takes to make every click snappy.

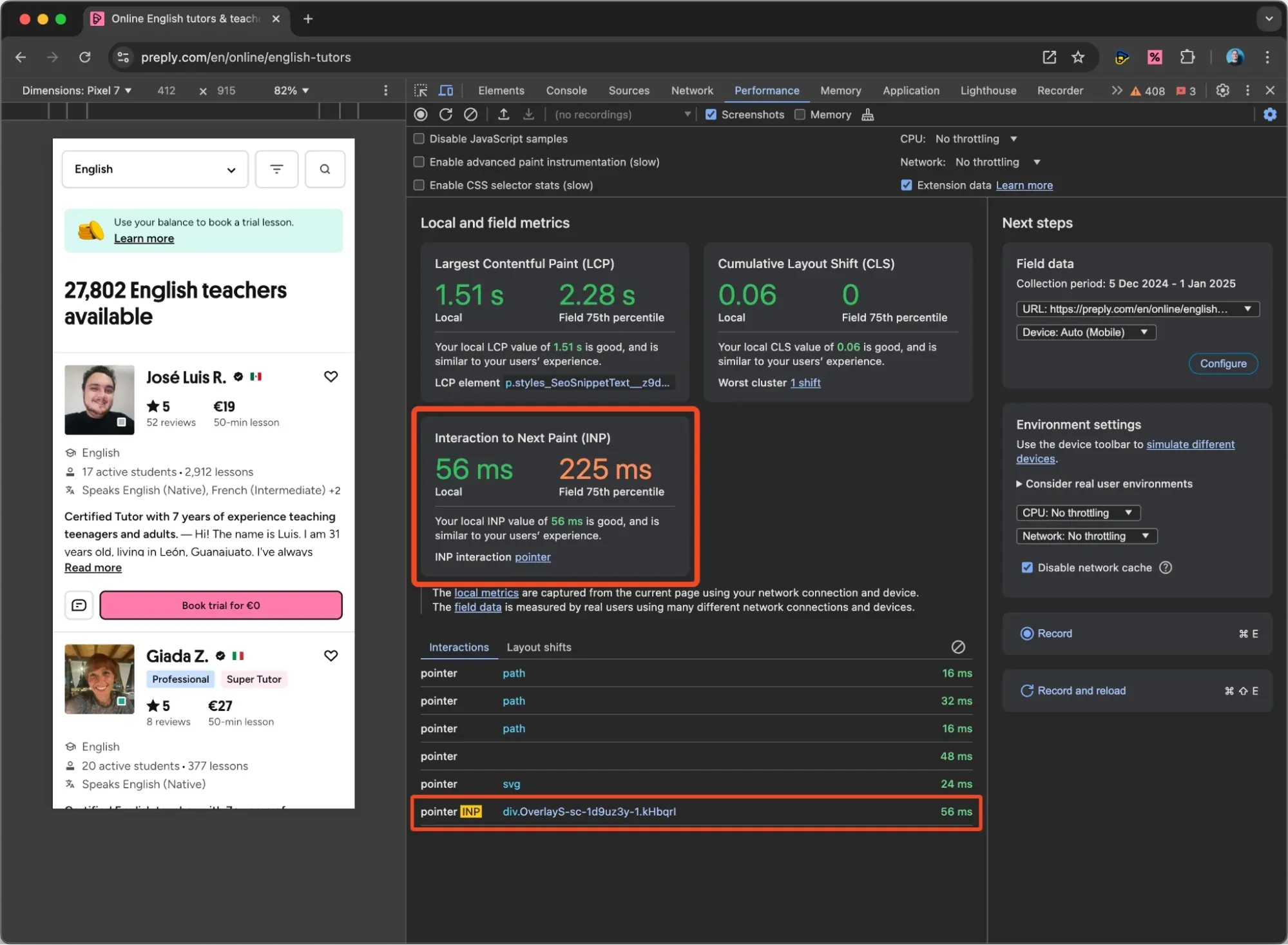

The Chrome DevTools Performance panel shows the Core Web Vitals for the current session, including INP and the interaction responsible for it.

Their internal dashboard reports the INP for the Home page (~250 ms before the optimizations, 185 ms after the optimizations) and the Search page (~250 ms before the optimizations, 175 ms after the optimizations).

GeekyAnts – Upgrading to Next.js 13 and RSC for a “Blazing-Fast” Website

GeekyAnts is a product development studio and consultancy that provides software development and consulting services to businesses.

Challenge

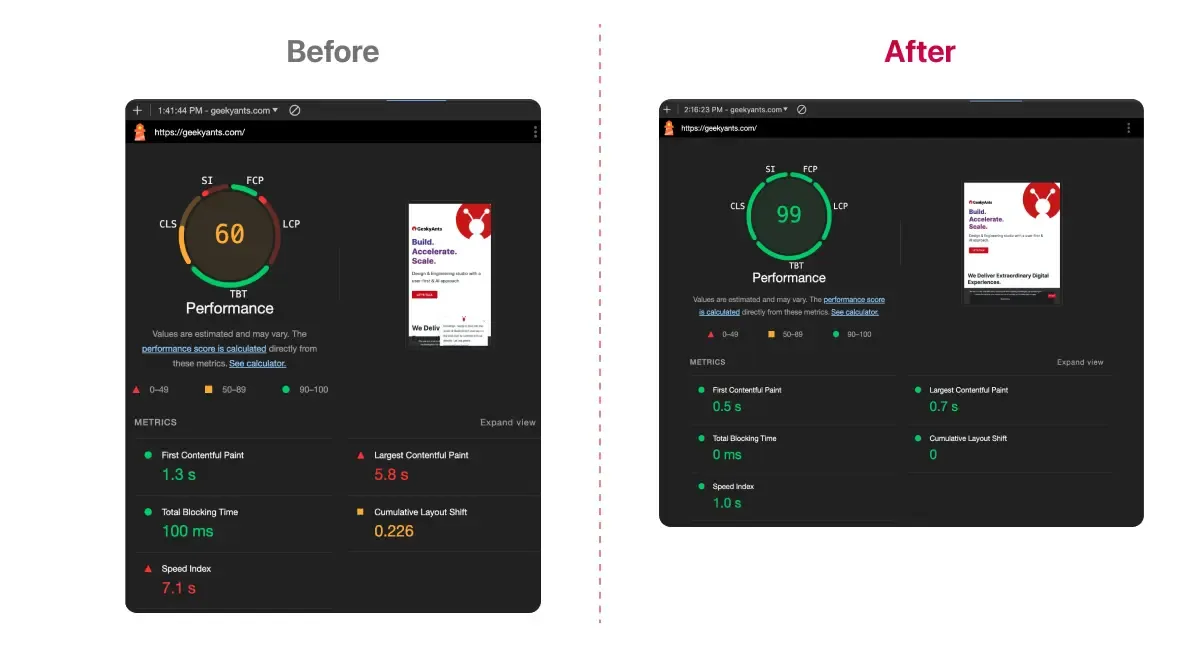

GeekyAnts, a tech consultancy, wanted to improve its corporate website’s performance and user experience. Their landing pages were content-rich (images, media, and info) and were lagging with Lighthouse performance scores around 50 – clearly suboptimal. The low page speed was affecting SEO rankings and user engagement. They identified large client-side JavaScript payloads and inefficient data loading as key issues. To turn this around, GeekyAnts decided on a bold step: upgrade their site to Next.js 13 (from an older Next.js version) and leverage the brand-new React Server Components (RSC) feature. This was a cutting-edge move in 2024, intended to reduce client-side work and speed up rendering. The challenge lay in migrating to a new app structure and mental model while keeping the site functional and SEO-friendly.

Solution

The GeekyAnts team undertook a comprehensive overhaul, rebuilding their site on Next 13’s App Router with RSC. Their process included:

- Adopting React Server Components: With RSC, they moved a significant amount of UI rendering to the server. Components that did not require interactivity were converted to server components, meaning the HTML for those parts was generated at request time and sent down (no client-side React needed). This “reduced the need for client-side processing, making the site respond faster”, since less JavaScript had to run in the browser. In practice, the team found this meant a lot fewer bytes of JS shipped, cutting the main-thread execution time and allowing pages to become interactive much sooner. (In an illustrative example, they noted that with RSC the React component tree can be “selective” – not every element becomes a client-side component, only those truly needed on the client.)

- Reworking Data Fetching to the Server: Previously, some page data may have been fetched on the client or through inefficient APIs. The Next 13 App Router encouraged a shift to server-side data fetching with async server components, which take the place of `getServerSideProps` in the RSC model. GeekyAnts reorganized how their pages load data, moving API calls into server components so that the data is already present when the HTML is sent. This eliminated round-trip delays after the initial load. They had to ensure their APIs and database queries ran efficiently on the server, but the payoff was big: users perceive a faster load because the content arrives pre-rendered.

- Leveraging Next.js Performance Optimizations: By upgrading, they benefited from Next.js 13’s defaults: automatic code-splitting, improved image optimization, and the new routing and streaming capabilities. They used Next’s built-in Image component (or similar techniques) to optimize images and serve them in modern formats. They also likely enabled streaming SSR, splitting the HTML into chunks so that above-the-fold content could stream to the browser while heavier below-the-fold components rendered a moment later. (Streaming is part of the App Router and RSC paradigm and helps Time to First Byte and First Contentful Paint.)

- Testing and Tuning: After the upgrade, the team didn’t stop at “it works”; they measured Lighthouse, PageSpeed Insights, and Core Web Vitals. They fine-tuned details such as using the `"use client"` directive only where needed (to keep most components on the server) and checked that all metrics (CLS, LCP, INP) met the “good” thresholds. The result was a polished, modern Next.js app.

Results

The transformation was a success. GeekyAnts reported that “health scores reached 90+” on Lighthouse/PageSpeed after deployment, a huge jump from the ~50 before, and all Core Web Vitals now met the “good” thresholds. In side-by-side comparisons, JavaScript execution time and main-thread work were markedly reduced thanks to RSC (as shown in their before/after charts). In short, the site became much faster and more interactive, with near-instant content loads. SEO also improved: with faster speeds and better-structured content, their pages’ “SEO health” scores rose as well. The user experience was smoother too; for example, navigation felt more instantaneous, and heavy pages such as the media-rich landing page now load without long freezes.

This case study demonstrates the real-world impact of Next.js 13 and React Server Components: by shipping less JavaScript and doing more work on the server, apps can achieve dramatic performance gains. GeekyAnts did note that the transition required changes in how they handle things like API calls (shifting some logic to server), but the result justified the effort. For teams seeking a “blazing-fast” UX, this provides a blueprint: embrace the latest framework capabilities to streamline what runs in the browser, and you can reap substantial improvements in both speed and user satisfaction.

Inngest – Adopting Next.js App Router for a Better DX and Instant UX

Inngest is a serverless workflow platform that simplifies background jobs, event-driven functions, and developer automation.

Challenge

Inngest, a SaaS platform, undertook a significant front-end redesign in 2023–2024. They moved from a Create React App (CRA) + React Router setup to Next.js 13 with the new App Router. The goals were not only performance but also developer productivity and maintainability. They wanted to eliminate the blank loading state that their old CSR app showed on initial load, improve routing and data loading, and take advantage of modern React features (server components, streaming, etc.). However, adopting the App Router early came with a learning curve, especially around its new caching behavior and routing conventions. The team faced questions like:

- How can global state (e.g., filters) be handled across the new layouts?

- How can dynamic data be kept fresh with Next’s caching?

- How can streaming be used without complicating the codebase?

This case captures how Inngest navigated these challenges, yielding both UX improvements and a smoother developer experience (DX).

Solution

As they rebuilt the Inngest Dashboard with Next.js 13, the team documented key lessons and techniques:

- Static Pre-render + Streaming = Fast UX: Using Next’s static rendering where possible, the new app serves an immediate initial view instead of a blank screen. Even on pages that require dynamic data, they employed React’s streaming SSR. This allowed them to send down the shell of the page (header, nav, layout) right away, then stream in the data-driven sections as they become ready. In practice, users would see the frame of the dashboard instantly (with perhaps loaders in content areas) rather than waiting for a full client render. This allowed users to start interacting with the page sooner, greatly improving perceived performance.

- Nested Layouts & Preserving State: The App Router’s nested layouts enabled Inngest to maintain state between navigations in ways that were hard before. They could share UI (such as navigation bars or filters) across many pages without re-mounting, avoiding expensive re-renders when switching views. For example, a user’s selected environment filter could persist while they navigated between sections. However, they discovered a quirk: URL search params are not passed to layout-level server components (to prevent stale values). Their initial approach of putting the filter in the URL as `?env=` and reading it in a layout failed to update on navigation. They solved this by switching to a route param (e.g. `/env/[env]/functions`), so the layout picks up environment changes as part of the path. This kept their global filter state in sync, and it is a good example of adapting state management to Next’s architecture: moving state into the URL path made it readily accessible and consistent across SSR and client transitions.

- Understanding and controlling caching: The App Router introduced two layers of caching by default: a client-side cache for route transitions and a server-side cache for data fetches. Inngest learned to be mindful of both. For truly dynamic data (like live logs and metrics), they disabled Next’s default caching by adding `export const dynamic = "force-dynamic"` to those pages, which ensured they weren’t unintentionally serving stale data from a previous request. They also used `fetch`’s `cache` option and `next.revalidate` setting where appropriate to fine-tune freshness. By adopting caching incrementally (enabling it once they understood it), they struck a balance: quick repeat navigations thanks to caching, with no stale-content surprises for rapidly changing data.

- Developer Experience improvements: The App Router’s opinionated file structure and conventions became a boon for the team’s DX. They could colocate components, tests, and styles with route segments, making the project easier to navigate. The special files (`layout.js`, `page.js`, `loading.js`, `error.js`, etc.) let them declare React Suspense boundaries and error boundaries directly in the file tree. Where loading states are handled and where errors are caught became clear just by looking at the folders, a big improvement over the old setup, where such logic was buried in code. Inngest’s developers found that this encouraged cleaner code and better separation of concerns. Next 13’s built-in support for features like middleware (used for auth redirects) also eliminated custom code and sped up their dev workflow.
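Once the environment lives in a path segment, every link and environment switcher has to carry it. A pair of helpers can keep that consistent; this is a hypothetical sketch (`envPath`, `switchEnv`, and the `functions` default section are assumptions) modeled on the `/env/[env]/functions` route from the case study.

```typescript
// Builds a dashboard path such as /env/production/functions.
function envPath(env: string, section: string): string {
  return `/env/${encodeURIComponent(env)}/${section}`;
}

// Replaces the [env] segment of a pathname while keeping the rest, so an
// environment switcher leaves the user on the same section.
function switchEnv(pathname: string, nextEnv: string): string {
  // "/env/production/functions" splits into ["", "env", "production", "functions"].
  const segments = pathname.split("/");
  if (segments.length < 3 || segments[1] !== "env") {
    return envPath(nextEnv, "functions"); // hypothetical default section
  }
  segments[2] = encodeURIComponent(nextEnv);
  return segments.join("/");
}
```

With the environment in the path, a layout at `app/env/[env]/layout.tsx` would receive it through its `params` prop and re-render when it changes, which is the behavior the `?env=` approach lacked.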

Results

After deploying the new Next.js 13 dashboard, Inngest saw immediate benefits. New users could load the app and see content faster (no more jarring white screen on first load), and interactions felt snappier thanks to streaming and intelligent caching. Internally, the team noted that onboarding new developers was easier — the file layout itself served as documentation of the app’s structure, and conventions reduced the “it works on my machine” issues. While exact performance metrics weren’t publicized, the improved page loading experience was evident: portions of each page (header, nav) render instantly, and content appears progressively. They successfully combined SSR and CSR to get the best of both: initial SSR for immediate UI and SEO, and CSR (with React hydration) to make the app feel as fluid as a SPA. The architectural decisions, such as putting global state in route params, proved effective; global filters and context persisted seamlessly across navigations without issues. In summary, by embracing Next.js’s App Router early, Inngest achieved a more performant, user-friendly app and improved developer productivity through better patterns. Their lessons learned have become valuable guidance for other teams migrating to Next.js’s new paradigm.

Aggregated takeaways and best practices (2022–2025)

Each of the case studies we’ve covered provides unique insights, but together they reveal clear trends and best practices for advanced React and Next.js applications:

1. Performance optimization is paramount

Improving web performance has been a consistent theme, with Core Web Vitals (CWV) as the yardstick. Teams that invested in performance saw technical gains and business benefits (higher SEO rankings, user retention, and conversion rates). Common strategies included:

- Optimizing Core Web Vitals: Largest Contentful Paint (LCP) and Interaction to Next Paint (INP) were key focus areas by 2024. Projects achieved large CWV gains through techniques such as SSR/SSG for a faster initial paint and main-thread scheduling improvements for responsiveness. For instance, DoorDash cut LCP by ~65% and virtually eliminated slow-loading URLs, and Preply brought its interactions under the 200ms mark for “good” responsiveness. These improvements often led to tangible outcomes such as more search impressions and traffic (one site saw +35k monthly Google impressions after fixing its CWVs). The takeaway: measure, target the worst CWV metrics, and address them methodically. Even small reductions in render or interaction time (e.g., shaving 100–200 ms off INP) can move a page into the “good” zone and yield outsized benefits in user engagement.

- Reducing JavaScript payload: A recurrent best practice is minimizing JS sent to the browser – “reduce code on the client to a minimum”. Less script means faster parsing, less blocking, and quicker interactivity. All our case studies pursued this: Next.js 13’s RSC helped GeekyAnts send dramatically less JS (no need to hydrate content that can be static); Preply aggressively code-split and pruned unused code; DoorDash trimmed a bloated SPA bundle by migrating portions to server-side. Many teams also embraced tree-shaking and bundler optimizations. The before-and-after charts often show a steep drop in JS execution time after such refactors.

- Main thread work and INP: With Google prioritizing INP in 2024, teams learned to break up long tasks and yield to user input. React 18’s concurrency features (transitions, Suspense) have become go-to tools, making hydration and state updates interruptible so the UI stays responsive. Profiling also often reveals unexpected bottlenecks (e.g., a heavy third-party script or an inefficient loop), so teams routinely use performance profilers to pinpoint slow code and then optimize or eliminate it. A mantra emerged: “long tasks are the enemy of INP.” Split them, defer them (e.g., via `requestIdleCallback` or web workers), or remove them entirely.
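Targeting the worst metric starts with classifying field data against Google’s published thresholds: LCP is “good” at or below 2.5 s and “poor” above 4 s, INP is “good” at or below 200 ms and “poor” above 500 ms, and CLS is “good” at or below 0.1 and “poor” above 0.25, each assessed at the 75th percentile of page loads. The helper names below are illustrative, and the nearest-rank percentile is one of several conventions.

```typescript
type Metric = "LCP" | "INP" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

// [upper bound for "good", upper bound for "needs improvement"].
// LCP and INP are in milliseconds; CLS is a unitless score.
const THRESHOLDS: Record<Metric, readonly [number, number]> = {
  LCP: [2500, 4000],
  INP: [200, 500],
  CLS: [0.1, 0.25],
};

function rate(metric: Metric, value: number): Rating {
  const [good, needsImprovement] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= needsImprovement) return "needs-improvement";
  return "poor";
}

// 75th percentile via the nearest-rank method.
function p75(samples: readonly number[]): number {
  if (samples.length === 0) throw new Error("p75 needs at least one sample");
  const sorted = [...samples].sort((a, b) => a - b);
  return sorted[Math.ceil(0.75 * sorted.length) - 1]!;
}

// Usage: classify the p75 of collected INP samples
// (inpSamplesMs is hypothetical field data):
// rate("INP", p75(inpSamplesMs));
```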

2. SSR vs CSR: Striking the right balance

The debate between server-side rendering (SSR) and client-side rendering (CSR) has evolved into nuanced hybrid approaches. There is no one-size-fits-all – the best solution often combines both. Key insights include:

- SSR for initial load and SEO: Server-rendering page content (or pre-generating it statically) is invaluable for first-paint speed, SEO, and ensuring that even users with slow devices or connections get something quickly. All case studies that adopted SSR saw a better first-view experience (DoorDash’s SSR pages loaded significantly faster than the old CSR ones, and Inngest eliminated the blank screen by rendering on the server). SSR also ensures content is indexable and that users without JS or with assistive tech can access the basic page. The general practice is to use SSR/SSG for entry points, content-heavy pages, and pages that need to rank on search engines.

- CSR for Interactivity and Rich UI: Pure client-side rendering still has an important role in highly interactive portions of an app. Especially for internal dashboards or apps where SEO is irrelevant, CSR can provide snappier interactions after the initial load. One project shared a noteworthy lesson about switching certain interactions from SSR to CSR: after moving some data fetching to the client, the app “felt way more performant” because tabs updated immediately on click (with a loading placeholder) instead of making the user wait for a server round-trip. This highlights that SSR can add latency to user actions if not carefully handled. Teams increasingly use SSR for the shell and initial data, then rely on CSR for subsequent in-page updates or navigations (often with APIs called via React Query or similar). This hybrid approach gives the “SPA-like” fluidity users expect while retaining the SEO and first-load benefits of SSR.

- Progressive Hydration & Islands: Modern frameworks (Next.js, and others like Astro or Qwik) encourage progressive hydration: hydrate only the interactive parts, and do it gradually. React Server Components (RSC) push this further by not sending JS for non-interactive parts at all. The emerging best practice is to identify “islands” of interactivity and focus hydration/CSR there, leaving the rest server-rendered and static, which dramatically cuts client work. Many teams did this manually in 2022–2023 (e.g., splitting a page into components and using Next’s `dynamic()` to lazy-load some of them on the client only when needed). By 2024, RSC automates much of this. The result is SSR and CSR working in harmony: SSR provides the content and baseline UI, and CSR enhances it where necessary (e.g., attaching event handlers or handling live updates).

- Watch out for SSR Pitfalls: One trade-off with SSR is a potentially longer Time to First Byte when server work is heavy. Developers note that SSR “doesn’t mean slow like old PHP apps” if done right, but it can be if done wrong. DoorDash ran into this and fixed it by lazy-loading non-critical components server-side. Caching rendered output at the CDN layer can also mitigate SSR overhead. Another pitfall is making too many blocking data calls during SSR, which React’s `<Suspense>` boundaries combined with Next.js streaming help avoid by allowing partial rendering. Overall, teams learned to offload or parallelize expensive work in SSR so that long server compute times don’t negate its benefits.

Bottom line: Use SSR to “make it work” (deliver content fast, improve SEO), then “make it fast” by carefully tuning or blending in CSR where it improves the UX. Many successful projects use SSR for the page load and CSR for subsequent interactive bits – achieving an ideal balance.

3. Smart Caching Strategies for speed and freshness

Caching emerged as a critical component of performance optimization. The case studies illustrate caching at multiple levels (CDN, application, and client) to both accelerate responses and reduce load. Best practices include:

- Edge and CDN Caching: Putting a content delivery network (CDN) in front of your Next.js app and assets is now standard. For static assets (JS bundles, images, CSS), CDN caching offloads traffic from servers and brings content closer to users. Even dynamic Next pages can benefit via Incremental Static Regeneration (ISR) or stale-while-revalidate strategies, where a page is pre-rendered and cached for a short period. Many teams saw huge wins here: Preply’s use of Amazon CloudFront cached content globally, cutting latency from 1.5s to 0.5s and directly increasing conversions by 5%. The actionable insight: configure your CDN and caching headers carefully. Use Next’s getStaticProps with a revalidate interval (Pages Router) or fetch with the next.revalidate option (App Router) to let pages be cached and only periodically updated. Serve cacheable pages whenever possible and only fall back to SSR for truly real-time data.

- Next.js App Router cache: Next 13+ introduced an automatic server-side data cache for fetch() calls during rendering and a client-side navigation cache. This can drastically speed up navigation (avoiding refetching data for recently visited pages) and improve server performance. However, as Inngest found, understanding the invalidation rules is key. In Next 13 and 14, fetch calls in Server Components are cached indefinitely by default unless marked otherwise. The lesson is to explicitly mark dynamic data (cache: 'no-store' on the fetch call, or export const dynamic = 'force-dynamic' on the route segment) when needed and, conversely, allow caching for endpoints that don’t change often. In practice, teams combine stale-while-revalidate caching for most content (ensuring fast responses) with on-demand revalidation or disabling cache for specific data that must always be fresh.

- Client-Side state caching: On the client, using libraries like React Query (TanStack Query) has become a best practice to cache API responses and avoid redundant requests. Instead of every component fetching the same data, React Query provides a shared cache – fetches of the same query are consolidated, and the data is stored for fast reuse. This approach not only improves performance but also simplifies state management (the library handles background updates and cache invalidation). Several teams have migrated from manually lifting state or using Redux for server data to using React Query or SWR. The result is snappier UIs (as data is often instantly available from the cache) and lower network chatter.

- Caching pitfalls: Developers have learned to be cautious of cache coherence and staleness. A classic mistake is caching something too aggressively and serving outdated info. The case studies show safeguards: e.g., Inngest disabled caching on certain routes until they could implement proper revalidation. Next.js 15 went further, changing the defaults so that fetch requests and GET Route Handlers are no longer cached unless you opt in, which makes caching behavior more predictable. The advice is to start with simple caching (maybe even none for dynamic data), then gradually enable it as you put in place the monitoring or hooks to update content. Also, always test with the cache on and off to ensure your app still works correctly (no hidden dependencies on fresh data).
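The request consolidation described for React Query can be illustrated with a small hand-rolled cache. This is a sketch of the idea, not React Query's implementation or API: QueryCache, query, invalidate, and staleTimeMs are hypothetical names (loosely echoing React Query's staleTime option), and the clock is injectable so the behavior can be tested deterministically. Concurrent requests for the same key share one in-flight promise, fresh results are reused until they are older than staleTimeMs, and invalidate() provides an event-based escape hatch.

```typescript
// One cache slot: last successful data, when it was stored, and any running request.
type Entry<T> = { data?: T; updatedAt: number; inFlight?: Promise<T> };

// Hypothetical minimal query cache illustrating request deduplication and
// time-based staleness (a sketch, not React Query's actual implementation).
class QueryCache {
  private entries = new Map<string, Entry<unknown>>();
  private readonly staleTimeMs: number;
  private readonly now: () => number;

  constructor(staleTimeMs: number, now: () => number = () => Date.now()) {
    this.staleTimeMs = staleTimeMs;
    this.now = now; // injectable clock, useful for tests
  }

  query<T>(key: string, fetcher: () => Promise<T>): Promise<T> {
    const entry = this.entries.get(key) as Entry<T> | undefined;

    // 1. A request for this key is already running: share it.
    if (entry?.inFlight) return entry.inFlight;

    // 2. Fresh cached data: reuse it without hitting the network.
    if (entry && entry.data !== undefined && this.now() - entry.updatedAt < this.staleTimeMs) {
      return Promise.resolve(entry.data);
    }

    // 3. Missing or stale: start exactly one request and record it as in flight.
    const inFlight = fetcher().then(
      (data) => {
        this.entries.set(key, { data, updatedAt: this.now() });
        return data;
      },
      (err: unknown) => {
        this.entries.delete(key); // don't cache failures
        throw err;
      },
    );
    this.entries.set(key, { data: entry?.data, updatedAt: entry?.updatedAt ?? 0, inFlight });
    return inFlight;
  }

  // Event-based invalidation: the next query for this key goes to the network.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}
```

Real libraries layer much more on top (background refetching, garbage collection of unused entries, retries), which is why teams generally adopt one rather than maintain a cache like this themselves.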

In summary, effective caching can yield order-of-magnitude performance boosts (milliseconds instead of seconds). Use CDN and server caching to speed up delivery globally, and leverage application-level caches to avoid recomputation. Just remember to balance speed with data freshness – stale data can harm UX, so design your invalidation strategy (time-based, event-based, etc.) from the start.
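As a small illustration of time-based caching at the CDN layer, the sketch below builds a Cache-Control header. The helper name and the CachePolicy shape are hypothetical; the directives themselves are standard HTTP: s-maxage applies to shared caches such as CDNs, and stale-while-revalidate lets a cache keep serving a stale copy while it fetches a fresh one in the background. max-age=0 keeps browsers revalidating while the CDN holds the shared copy, and personalized responses are kept out of shared caches entirely.

```typescript
// Hypothetical caching policy for one response.
interface CachePolicy {
  cdnMaxAgeSeconds: number;             // how long a shared cache may serve without revalidating
  staleWhileRevalidateSeconds?: number; // extra window to serve stale content while refetching
  private?: boolean;                    // per-user data must never land in a shared cache
}

// Builds a Cache-Control header value from a policy (illustrative helper).
function cacheControlHeader(policy: CachePolicy): string {
  if (policy.private) {
    // Personalized responses: keep them out of CDNs and other shared caches.
    return "private, no-store";
  }
  // Browsers revalidate every time (max-age=0); the CDN caches for s-maxage seconds.
  const directives = ["public", "max-age=0", `s-maxage=${policy.cdnMaxAgeSeconds}`];
  if (policy.staleWhileRevalidateSeconds !== undefined) {
    directives.push(`stale-while-revalidate=${policy.staleWhileRevalidateSeconds}`);
  }
  return directives.join(", ");
}
```

For ISR pages, Next.js emits headers along these lines on its own; a helper like this is mostly useful for custom route handlers or other origins behind the same CDN. CDN support for stale-while-revalidate varies, so check your provider's documentation.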

4. Evolving State Management: simplify and leverage specialized Hooks

State management in large React applications has seen a shift in recent years. Whereas earlier projects might default to heavy libraries like Redux for global state, case studies from 2022–2025 show teams paring back global state and using lighter, more maintainable solutions. Key learnings:

- Don’t over-engineer global state: Many teams realized that a lot of state can be kept local or managed with basic React Context rather than a complex global store. In practice, React’s own tools plus custom hooks often suffice for most needs. As one commentary put it: “React Context API and state should cover most use cases… I’ve successfully used only context & state with no state library for years”, and one should avoid adding global state libraries unless truly needed. Especially for apps that mostly display fetched data (which can be cached), the need for a giant client state object diminished.

- Rethinking Redux: Redux, while powerful, comes with costs in complexity and bundle size. By 2023, it was common to question if Redux was necessary at all. “Adding libraries like Redux … adds complexity, boilerplate and bundle size,” one analysis noted, urging developers to reconsider using it just because it’s “cool”. Several case studies implicitly followed this advice: DoorDash managed to implement an app context for SSR/CSR without pulling in a heavy state library, and other teams used Next.js routing (as in Inngest’s case) or context to manage UI state like tabs and filters. The trend is clear: use Redux (or similar) only for truly complex, cross-cutting state that can’t be handled otherwise, and even then, consider newer alternatives.

- Rise of specialized state libraries: In place of monolithic state management, specialized libraries have gained popularity. React Query (for server data state) is a prime example. It addresses data fetching, caching, and updating in one go, which React by itself doesn’t handle well. Many teams now use React Query or Apollo Client (for GraphQL) to manage server state instead of manually storing it in global state. For client-only state (like UI toggles, form data, etc.), libraries like Zustand or Jotai provide a lightweight, flexible store with far less boilerplate than Redux. In discussions, developers often mention using Zustand for simple global state and React Query for data fetching instead of a large Redux store. This modular approach keeps the app more efficient – you only pay (in bundle size and complexity) for what you need.

- Server-State in Next.js 13: Another emerging pattern is letting the server handle more state. With React Server Components, data fetching and derived data that once lived in client-side state can be computed on the server at request time. Additionally, Next.js Server Actions (experimental in Next.js 13 and stable since Next.js 14) allow mutations to be handled on the server seamlessly, reducing the need for complex client-side state to track form submissions, etc. While still new, this points to a future where a lot of what we consider “state management” on the client might be handled by the framework with server help. The actionable insight for developers is to stay updated on these new patterns – they can drastically simplify how much state you manage on the client.
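To show how little machinery a lightweight client store needs, here is a hand-rolled sketch of the subscribe/notify pattern that libraries such as Zustand build on. It is not Zustand's API; createStore, getState, setState, and subscribe are illustrative names. The subscribe and getState pair matches the contract of React's useSyncExternalStore hook (subscribe returns an unsubscribe function, and the snapshot is a new object after every update), so a component could read it with useSyncExternalStore(store.subscribe, store.getState).

```typescript
type Listener = () => void;

// Hand-rolled minimal store illustrating the external-store pattern
// (a sketch, not Zustand's implementation).
function createStore<S extends object>(initial: S) {
  let state = initial;
  const listeners = new Set<Listener>();

  return {
    // Current snapshot. Updates replace the object rather than mutating it,
    // so reference equality tells readers whether anything changed.
    getState: (): S => state,

    // Shallow-merges a partial update, then notifies every subscriber.
    setState: (patch: Partial<S>): void => {
      state = { ...state, ...patch };
      listeners.forEach((listener) => listener());
    },

    // Registers a listener and returns a function that removes it.
    subscribe: (listener: Listener): (() => void) => {
      listeners.add(listener);
      return () => {
        listeners.delete(listener);
      };
    },
  };
}
```

For a single piece of UI state such as an active tab, plain useState or context is usually enough; a store like this earns its place only when several distant components need the same client-only state.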

In conclusion, simpler is often better for state management. Evaluate what truly needs to be global. Use React’s built-in capabilities and targeted libraries to handle specific kinds of state. The payoff is twofold: better performance (less JavaScript and re-renders) and better developer experience (less boilerplate and cognitive load). One team humorously noted a “skill issue” where a complex state solution wasn’t needed at all for highlighting an active tab – a 10-minute context + useState fix sufficed. The lesson: always question if there’s an easier way before reaching for heavy state tools.

5. Improved Developer Experience (DX) and maintainability

Maintaining a large React codebase can be challenging, but recent innovations and practices have markedly improved DX. Case studies highlight several approaches that keep developers productive and codebases maintainable:

- Next.js App structure & convention: Next.js’s opinionated structure (especially with the App Router) has streamlined how teams build apps. By embracing convention over configuration, teams spend less time bikeshedding over project structure and can lean on built-in behaviors. The file-based routing, nested layouts, and colocated components all lead to a more intuitive project. In Inngest’s case, just seeing the folder hierarchy tells you where things like loading states are handled. This clarity speeds up the onboarding of new devs and day-to-day navigation in the repo. The use of familiar conventions (the pages directory or, now, the app directory) across Next.js projects also means developers can transfer knowledge between projects easily.

- Tooling for fast iteration: The React ecosystem in 2022–2025 has seen big improvements in build and dev tooling. Turbopack (the Rust-based bundler Next.js is adopting as Webpack’s successor) reached stability for local development, offering “up to 90% faster code updates”. Faster hot-reload times mean developers spend less time waiting and more time in flow. Additionally, better error overlays and stack traces in Next.js and CRA alike have reduced debugging time. Many teams also adopted TypeScript, which, while not covered in our cases, is now the norm and drastically cuts down on certain classes of runtime errors. The net effect is a more pleasant DX: you can iterate quickly and catch issues early.

- CI/CD and deployment improvements: Several case studies (like Preply’s AWS story) highlight automation that improved DX – e.g., going from 100-hour manual deployments to 30-minute automated ones. While not React-specific, having robust CI/CD (with tests, performance budgets, and bundle analysis) helps maintain quality at scale. Some teams set up performance testing pipelines (using tools like WebPageTest or Lighthouse CI) to catch regressions in Core Web Vitals before they hit production. Knowing that performance budgets are enforced gives developers the confidence to refactor without accidentally slowing the app.

- Embracing React primitives (Suspense, Error Boundaries): The newer patterns encourage better code architecture. Suspense for data fetching leads developers to handle loading states declaratively, resulting in less ad-hoc loading logic scattered across components. Error boundaries, now easier to configure (e.g., with an error.js file per route segment in the Next 13 App Router), encourage teams to plan for failures rather than ignore them. These patterns improve maintainability – the app is resilient to slow networks or exceptions, and the code for these concerns is centralized.

- Developer education and documentation: A subtle but important trend is teams investing in learning and documenting new features. For example, Inngest wrote about their lessons with caching and routing quirks, effectively documenting gotchas for others. This culture of knowledge sharing (through engineering blogs or internal wikis) means developers don’t repeat the same mistakes and ramp up on new tech faster. Next.js and React’s rapidly evolving APIs (like Server Components) require this adaptive learning. Successful teams foster an environment where developers can play with experimental features (in a branch or small project) to understand them before deploying widely.
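The performance budgets mentioned in the CI/CD point can start as a script that fails the build when a route ships more JavaScript than agreed. The sketch below is hypothetical throughout: the route names, sizes, and the BundleReport and Budget shapes are made up, and in a real pipeline the numbers would come from your build output or a bundle-analysis tool rather than a hard-coded array.

```typescript
// Hypothetical per-route bundle size, e.g. parsed from build output (compressed kB).
interface BundleReport {
  route: string;
  jsKb: number;
}

// Budget: a default ceiling plus optional per-route overrides.
interface Budget {
  defaultMaxKb: number;
  overrides?: Record<string, number>;
}

interface Violation {
  route: string;
  jsKb: number;
  maxKb: number;
}

// Returns every route whose JavaScript exceeds its budget.
function checkBudgets(reports: BundleReport[], budget: Budget): Violation[] {
  return reports
    .map((r) => ({
      route: r.route,
      jsKb: r.jsKb,
      maxKb: budget.overrides?.[r.route] ?? budget.defaultMaxKb,
    }))
    .filter((r) => r.jsKb > r.maxKb);
}

// Human-readable summary for CI logs.
function formatViolations(violations: Violation[]): string {
  if (violations.length === 0) return "All routes within budget.";
  return violations
    .map((v) => `${v.route}: ${v.jsKb} kB exceeds budget of ${v.maxKb} kB`)
    .join("\n");
}
```

A CI step would print the summary and exit with a non-zero status when the violation list is non-empty. Per-route overrides keep one heavy but justified page (a dashboard, say) from forcing a looser budget on every other route.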

Key DX takeaway: modern React/Next development is getting “easier” in many respects – not because the tech is simpler, but because the frameworks abstract more and provide better defaults. By leaning into those defaults (file structure, new routing, etc.) and using faster tools, teams are shipping more with less friction. A better DX often indirectly results in better UX: developers with efficient tools can focus on polishing the user experience instead of wrestling with config or slow builds.

6. Accessibility by design

Ensuring web apps are accessible to all users (including those with disabilities) has become a non-negotiable aspect of quality. The case studies touched on it indirectly (e.g., SSR for SEO also benefits screen readers), and other industry stories provide clear guidance. Best practices that emerged include:

- Semantic HTML and proper ARIA usage: Teams like GitHub’s design systems group have emphasized using the correct semantic elements – for example, use <button> for clickable UI, not a <div> with an onClick, so that assistive technologies recognize it as a button. When custom UI patterns (like tabbed interfaces) are needed, adding appropriate ARIA roles (e.g., role="tablist" and role="tab") can convey structure to screen readers. The rule of thumb, following the ARIA Authoring Practices Guide, is to build with semantics first and add ARIA attributes only to fill in gaps. In practice, many teams now include accessibility as a requirement when creating any new component.

- Keyboard navigation and focus management: A common failing of web apps (especially SPAs) is poor keyboard support. Case studies of accessible component building show that developers should implement keyboard controls (e.g., arrow keys to move between tabs, Esc to close modals) and manage focus explicitly. When content changes or a modal opens, focus should move to a sensible element, and users should be able to tab through interactive elements in a logical order. In our research, experts advise against removing elements from the DOM dynamically in a way that blindsides screen reader users. Instead, hide them visually or with aria-hidden if needed, but keep a consistent DOM for assistive tech.

- Testing with assistive tech: Teams have learned to test their apps with screen readers (NVDA, VoiceOver) and keyboard-only usage as part of the QA process. Automated tools (like axe-core or Lighthouse’s accessibility audits) catch many issues (like missing alt text and low color contrast), but manual testing finds flow and usability issues. Some companies run usability sessions with users who rely on assistive tech to get real feedback. The trend is shifting left: designers and developers consider accessibility at the design stage itself, rather than retrofitting it at the end. This was explicitly noted: “Accessibility should be considered early in the design stage… rather than trying to add them later”.

- Framework support: React and Next.js themselves have taken steps to bake in accessibility. Next.js, for example, includes a route announcer by default so that client-side route changes are announced to screen readers. The Next.js documentation highlights accessibility features and encourages progressive enhancement. Additionally, many component libraries (Material UI, Chakra UI, etc.) are now built with a11y in mind, so teams using them get many accessible behaviors by default. That said, custom components still need careful attention (as GitHub’s team demonstrated with their custom menu and tab examples).
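The keyboard behavior described for tabs corresponds to the tabs pattern in the ARIA Authoring Practices Guide: Left and Right Arrow move between tabs and wrap at the ends, while Home and End jump to the first and last tab. The core logic is independent of React, so here it is as a pure function; nextTabIndex is an illustrative name, not a library API.

```typescript
// Returns the index of the tab that should receive focus after `key` is pressed
// in a horizontal tablist, or null if the key is not a tab-navigation key
// (in which case the browser's default behavior should apply).
function nextTabIndex(current: number, tabCount: number, key: string): number | null {
  if (tabCount <= 0) return null;
  switch (key) {
    case "ArrowRight":
      return (current + 1) % tabCount; // wraps from the last tab to the first
    case "ArrowLeft":
      return (current - 1 + tabCount) % tabCount; // wraps from the first tab to the last
    case "Home":
      return 0;
    case "End":
      return tabCount - 1;
    default:
      return null;
  }
}
```

In a component, the tablist's onKeyDown handler would call nextTabIndex(activeIndex, tabs.length, event.key) and, for a non-null result, call preventDefault() and move focus to that tab. Pairing this with a roving tabindex (tabIndex 0 on the active tab, -1 on the others) lets a single Tab press move past the tablist into the panel.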

In essence, accessible development has become standard practice in modern React apps. The payoff is not just compliance or avoiding lawsuits; accessible apps often deliver better overall UX for everyone. For example, focusing on proper headings and landmarks for screen readers also improves SEO and findability. Improving keyboard navigation often uncovers more intuitive interaction patterns that benefit all users. Thus, leading teams treat accessibility efforts as an integral part of quality, on par with performance and security.

7. User Experience enhancements and impact

Ultimately, all the technical improvements feed into one goal: a superior user experience (UX). Across these case studies, we see several UX-focused enhancements and their impact:

- Faster loads and interactions = happier users: It’s no surprise that speed is a feature. When DoorDash made their pages load 65% faster, users were able to start browsing and ordering with far less friction. Similarly, Preply’s snappy interactions likely reduced drop-offs during crucial moments (like booking a lesson). There is ample evidence that improvements of even a few hundred milliseconds can increase conversion and engagement. Users today expect near-instant responses; teams that delivered sub-100 ms interactions (via careful performance work) set themselves apart. One case noted that after performance tuning, “tabs switched immediately on click” whereas before, there was a noticeable wait – that immediacy is something users notice and appreciate.

- Seamless navigation and transitions: Beyond raw speed, how smooth the app feels during navigation contributes to UX. Features like Next.js’s built-in link prefetching make page-to-page transitions feel almost instantaneous (the resources often load before the user even clicks). With the App Router and streaming, users can interact with portions of a page while other parts are still loading, as seen in the Inngest example. This reduces the perception of waiting and keeps users engaged. Micro-interactions like skeleton screens or subtle loading indicators (enabled by Suspense boundaries) also reassure users that things are happening. The best practices here are to avoid jarring full-page reloads whenever possible and provide feedback for any action that takes more than a couple hundred milliseconds. Many teams now use skeleton UIs or spinners within components, thanks to Suspense’s declarative fallback mechanism. The result is a UX where data fetching is gracefully handled and doesn’t frustrate the user.

- Consistent UX across devices: SSR strategies ensure content is available even on lower-end devices or slow networks (a big win for mobile users). Meanwhile, CSR enhancements ensure rich interactions are not limited by SSR – power users on modern devices get a fluid app-like experience. For example, by mixing SSR and CSR, apps like the Inngest dashboard can accommodate both scenarios: an initial fast render for everyone and then advanced interactive features for those who can take advantage. Another aspect of UX is reliability: features like error boundaries make sure that if something goes wrong, the user sees a friendly message or fallback UI rather than a broken page. Focusing on these details (often via improved DX tools) ultimately yields a more polished, error-free experience.

- Feedback and User-Centric Metrics: Several teams have started monitoring not just technical metrics but user-centric metrics (like Time to Interactive or even user satisfaction scores). By correlating improvements (say, a faster INP) with user behavior (longer session duration, higher NPS scores), they build a case for UX work. For instance, after speeding up their site, one organization observed higher conversion and donations, tying performance work directly to user success. This holistic view ensures that performance and accessibility efforts remain focused on real user impact, not just ticking boxes.
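The guideline about giving feedback for slow actions has a common refinement: show the indicator only if the action is still running after a short delay, so fast actions never flash a spinner. Here is a framework-agnostic sketch; withDelayedIndicator and its callbacks are hypothetical names, not a React or Next.js API, and the delay is left as a parameter rather than a fixed "couple hundred milliseconds".

```typescript
// Runs `task` and shows a loading indicator only if the task is still
// pending after `delayMs`. Fast tasks never show it; slow ones get feedback.
async function withDelayedIndicator<T>(
  task: () => Promise<T>,
  delayMs: number,
  showIndicator: () => void,
  hideIndicator: () => void,
): Promise<T> {
  let shown = false;
  const timer = setTimeout(() => {
    shown = true;
    showIndicator();
  }, delayMs);
  try {
    return await task();
  } finally {
    clearTimeout(timer); // if the task won the race, the indicator never appears
    if (shown) hideIndicator(); // only hide what was actually shown
  }
}
```

In React, the show and hide callbacks would typically toggle a piece of state that renders a skeleton or spinner.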

In summary, the period from 2022 to 2025 has shown that investing in React/Next.js performance and best practices pays off in UX. Users get faster, smoother, and more accessible applications. In turn, companies see better engagement and conversion. The case studies here serve as living proof that web UX is a crucial differentiator – and that it can be continuously improved by applying the right techniques and technologies.

Conclusion & actionable insights

The advanced case studies from 2022–2025 highlight a maturation in how we build React applications. Teams are tackling performance head-on, using Next.js and modern React features to achieve substantial improvements in Core Web Vitals and overall speed. The SSR vs CSR dichotomy has softened into a pragmatic mix of both, tailored to the use-case – initial HTML when you need it and client-side interactivity when it helps. Caching is leveraged at every layer to deliver instant results without sacrificing data freshness. State management is trending lighter and more purpose-built, reducing needless complexity. Developer experience enhancements (from better frameworks to faster tooling) mean teams can ship more reliably and maintainably than before. Critically, accessibility and user experience are first-class objectives, not afterthoughts, resulting in apps that are inclusive and delightful to use.

For engineering leaders and developers, the takeaways are clear and actionable:

- Measure and optimize what matters (e.g., focus on LCP and INP if they’re hurting you; use real user metrics to guide efforts).

- Adopt Next.js and React 18+ features to handle heavy lifting (SSR, RSC, Suspense, etc.) – they can dramatically improve performance and DX when used well.

- Cache aggressively but carefully, and use CDNs – speed up repeat visits and global access while ensuring you have a strategy to update stale content.

- Keep state management simple – don’t reach for Redux or global state unless needed; consider React Query or context for most cases.

- Invest in DX tools and architecture – a well-structured project (like Next’s app directory) and fast dev cycles will pay dividends in quality.

- Bake in accessibility and UX considerations from the start – use semantic HTML, test with screen readers, and design loading states and error states proactively.
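As a starting point for measuring with real user data, the sketch below computes the 75th percentile of field samples and rates it against the published Core Web Vitals thresholds (INP: good at or below 200 ms, poor above 500 ms; LCP: 2.5 s and 4 s; CLS: 0.1 and 0.25). Google assesses these metrics at the 75th percentile of page loads. The function names are illustrative, and the nearest-rank percentile used here may differ slightly from how CrUX or a RUM vendor computes percentiles.

```typescript
type Rating = "good" | "needs-improvement" | "poor";

// Core Web Vitals thresholds: [upper bound of "good", upper bound of "needs improvement"].
const THRESHOLDS = {
  INP: [200, 500],   // milliseconds
  LCP: [2500, 4000], // milliseconds
  CLS: [0.1, 0.25],  // unitless layout-shift score
} as const;

type Metric = keyof typeof THRESHOLDS;

// 75th percentile using the nearest-rank method.
function p75(samples: number[]): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil(0.75 * sorted.length); // 1-based rank
  return sorted[rank - 1];
}

// Maps a metric value to its Core Web Vitals rating.
function rate(metric: Metric, value: number): Rating {
  const [good, poor] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs-improvement";
  return "poor";
}
```

By this yardstick, an INP of around 380 ms, like Vio's starting point, rates as "needs improvement" rather than "poor".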

By following these practices, teams can achieve results like those in our case studies: faster apps, better engagement, easier maintenance, and satisfied users. The period covered here has been one of rapid evolution for React and Next.js in production, and the lessons learned set the stage for even more innovation and excellence in web development in the years ahead. Each improvement – whether a 65% faster load time or a 5% conversion lift – represents real users having a better experience. And ultimately, that is the true goal of any technology case study: to learn how to make things better for people who use our software every day.

Sources

- DoorDash Engineering | Improving Web Page Performance at DoorDash through Server-Side Rendering with Next.js

- Preply Engineering Blog | How Preply improved INP on a Next.js application (without React Server Components and App Router) (Feb 2025)

- Vercel | Improving Interaction to Next Paint with React 18 and Suspense

- GeekyAnts | Boosting Performance with Next.js 13 and RSC: A Case Study

- Inngest Blog | 5 Lessons Learned From Taking Next.js App Router to Production

- Reddit (/r/nextjs) discussion | Next.js SSR + Vercel = SLOW! (experiences on SSR vs CSR)

- Stackademic | React State Management 2023 (overview of state tools)

- GitNation Talk | Building Accessible Reusable React Components at GitHub by Siddharth Kshetrapal

- AWS Case Study | Preply: Raising Customer Conversion Rates with AWS (ElastiCache, CloudFront)

- SiteCare | Core Web Vitals Case Study: How a CWV Boost Saved Falling Traffic